CPU Scheduling

Tom Kelliher, CS 311

Mar. 2, 2012

Announcements:

From last time:

- Processes and threads; context switching.

Outline:

- Traditional process scheduling.

- Comparison criteria.

- Priority functions.

- Thread scheduling.

- Multiprocessor scheduling issues.

- Gantt chart examples.

Assignment:

Motivation:

- Traditionally: keep the CPU busy.

- Now:

- Promote modular design.

- Increase throughput (a multi-threaded server).

What are the three schedulers, and how do they function?

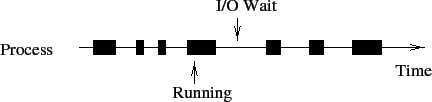

Model of a process: CPU-I/O burst cycles:

Distribution of bursts.

CPU bursts terminate due to:

- Process waits on event (blocked, suspended).

- Process' quantum expires (back to ready Q).

- Non-Preemptive scheduling.

Context switches occur:

- Running process terminates.

- Running process blocks.

I.e., running process controls the show.

New process takes over if running process blocks.

- Preemptive scheduling.

Principle: Highest priority ready process runs.

Quantum timers come into play.

Additional context switches:

- Higher priority process changes state from blocked to ready,

preempting running process.

- Quantum expires (kernel preempts).

Higher overhead.

- Selective preemptive scheduling.

User oriented, performance related criteria.

- Response time -- time from submission of request to receipt of first

output.

- Turnaround time -- time from submission of request to its

completion.

- Waiting time -- turnaround time minus CPU time; time spent waiting

for resources.

- Deadlines -- When deadlines are specified, percentage of deadlines

which are met.

User oriented, other criteria

- Predictability -- the same job should run in about the same amount

of time and cost regardless of other system activity.

System oriented, performance related criteria

- Throughput -- number of processes completed per unit of time.

- CPU utilization -- percentage of time CPU is performing actual work.

System oriented, other criteria

- Fairness -- processes should generally be treated the same, with no

process starving.

- Priority enforcement -- when priorities are assigned, they should be

adhered to.

- Balancing Resources -- keep all system resources busy, adjust

priorities accordingly. This can come in at the long-term scheduler level.

- CPU time -- usually the most important factor

- memory requirements -- a major criterion in batch systems; in

timesharing systems give a good measure of swapping overhead

- wall time -- important for process ``aging'' -- increase priority

of older jobs.

- total required CPU time -- specify max runtime for job (batch) or

take some average of previous runtimes.

- external priorities -- batch, interactive, realtime, VIP, etc. Let

user pick priority and charge for higher priorities.

- system load -- increasing quantum may offset swapping overhead,

increasing utilization. Continue giving good response to high priority

jobs, making others suffer. Graceful degradation.

Implemented using queues or priority queues.

- FCFS, FIFO -- non-preemptive. Run oldest process. Standard batch

priority function

- Implemented with a simple queue for the ready Q

- New jobs, jobs previously in wait or running state put at end of

ready Q

- Next job to run taken from head of ready Q

- Priority function: time in ready Q

- LIFO -- non-preemptive. Run newest process. Not real useful.

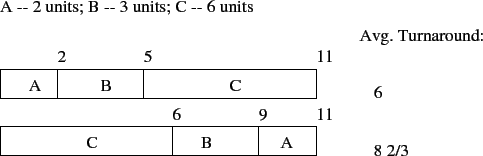

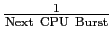

- SJF -- shortest job first. Non-preemptive. Run process with

shortest required CPU time.

- Time is time of next CPU burst.

- Implement with priority Q

- Estimate of next CPU burst:

where  is the estimated time and

is the estimated time and  is the actual time.

is the actual time.  is an exponential average of history.

is an exponential average of history.

- Priority function:

Provably optimal from turnaround/waiting point of view:

- SRT -- (shortest remaining time) preemptive version of SJF.

- RR -- (round robin) preemptive FCFS with a time quantum limitation.

Used in time sharing systems.

- Uses FCFS's priority function

- Additional factor in decision epoch: expiration of quantum timer

- Multi-level queues -- prioritized set of queues,

to

to  .

.

- Processes in queue

always have priority over queues

always have priority over queues  .

.

- A process remains within the same queue.

- Each queue may have its own scheduling algorithm.

- Alternative: each queue gets some fixed slice of the total CPU

cycles.

- Example: Queue for interactive jobs, RR scheduling; queue for

batch jobs, FCFS.

- Multi-level feedback queues -- similar to multi-level queues, except

that a process can move between different queues, based upon CPU usage.

- Must specify rules for moving the processes between queues.

- Ordinarily, lower priority queues have greater quantums, etc.

- Linux uses this method, with a 100ms quantum for all

queues. 141 priorities and run queues. A limited amount of dynamicism

for non-realtime tasks. Higher priority tasks have longer quanta, but

get ``expired,'' preventing starvation.

Suppose the following jobs arrive for processing at the times indicated and

run with the specified CPU bursts (at the end of a burst a process waits

for one time unit on a resource). Assume that a just-created job enters

the ready queue after any job entering the ready queue from the wait queue.

| Job |

Arrival Time |

CPU Bursts |

| 1 |

0 |

1 2 |

| 2 |

1 |

1 3 |

| 3 |

2 |

1 1 |

Calculate the average turnaround time for each of the scheduling

disciplines listed:

- First Come First Served.

- Shortest Remaining Time (assume that the running time

is the sum of the CPU bursts).

- Round robin with a quantum of 1.

Don't forget the ``bubble'' cycles (where no process is runnable), if

required.

Kernel-level (system scope) vs. user-level (process scope) threads.

pthread possibilities (implementation dependent):

- Quantum allocation.

- Process scope thread priorities; starvation.

- Process scope threads with same priority: FIFO (no preemption) or RR

(preemption) algorithms available.

- Symmetric Multiprocessing vs. asymmetric multiprocessing:

or

or  run queues.

run queues.

- Processor affinity: maximize cache hit rates vs. load balancing

vs. specialized devices attached to a single CPU.

- Hyperthreading to reduce memory stall-forced CPU idling.

- Virtualization: When a process quantum on a guest OS isn't all it's

cracked up to be.

Thomas P. Kelliher

2012-03-01

Tom Kelliher