Processes and Threads

Tom Kelliher, CS 311

Feb. 20, 2012

Announcements:

Exam in one week.

From last time:

- Adding a syscall to the kernel.

Outline:

- Syscall assignment.

- Processes.

- Threads.

- Program in execution.

- Serial, ordered execution within a single process. (Contrast task with multiple threads.)

- "Parallel" unordered execution between processes.

Three issues to address

- Specification and implementation of processes -- the issue of concurrency (raises the issue of the primitive operations).

- Resolution of competition for resources: CPU, memory, I/O devices, etc.

- Provision for communication between processes.

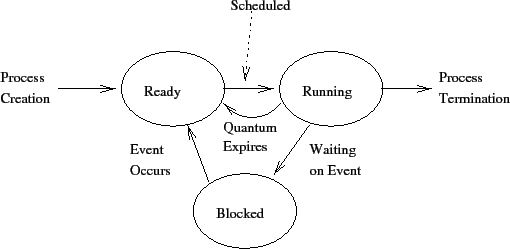

Three possible states for a process:

- Running -- currently being executed by the processor

- Ready -- waiting to get the processor

- Blocked (waiting) -- waiting for an event to occur: I/O completion, signal, etc. (Suspended -- ready to run but not eligible.)

How many in each state?
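The legal moves between the three states can be written down as a small table. The sketch below assumes the usual three-state diagram (a blocked process must pass through ready before it runs again); the enum and function names are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>

/* The three states from the list above (hypothetical names). */
enum proc_state { RUNNING, READY, BLOCKED };

/* Returns true if a process may move directly from one state to another. */
bool valid_transition(enum proc_state from, enum proc_state to)
{
    switch (from) {
    case RUNNING:
        /* Preempted (to READY) or starts waiting on an event (to BLOCKED). */
        return to == READY || to == BLOCKED;
    case READY:
        /* Dispatched by the short term scheduler. */
        return to == RUNNING;
    case BLOCKED:
        /* The event occurred; the process must queue for the CPU again. */
        return to == READY;
    }
    return false;
}
```

On a uniprocessor at most one process is running, while any number may be ready or blocked.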

Kept in a process control block (PCB) for each process:

- Code (possibly shared among processes).

- Execution stack -- stack frames.

- CPU state -- general purpose registers, PC, status register, etc.

- Heap -- dynamically allocated storage.

- State -- running, ready, blocked, zombie, etc.

- Scheduling information -- priority, total CPU time, wall time, last burst, etc.

- Memory management -- page, segment tables.

- I/O status -- devices allocated, open files, pending I/O requests, postponed resource requests (deadlock avoidance).

- Accounting -- owner, CPU time, disk usage, parent, child processes, etc.
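As a rough picture, a PCB is one kernel structure per process. The sketch below covers only some of the fields listed above; every name, type, and array size is an assumption chosen for illustration and does not match any particular kernel.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum proc_state { PROC_RUNNING, PROC_READY, PROC_BLOCKED, PROC_ZOMBIE };

/* CPU state saved and restored on a context switch. */
struct cpu_state {
    uintptr_t pc;        /* program counter */
    uintptr_t sp;        /* stack pointer into the execution stack */
    uintptr_t status;    /* status register */
    uintptr_t gpr[16];   /* general purpose registers; 16 is arbitrary */
};

struct pcb {
    int pid;
    int parent_pid;                /* accounting: parent */
    int owner_uid;                 /* accounting: owner */
    enum proc_state state;
    struct cpu_state cpu;
    int priority;                  /* scheduling information */
    unsigned long cpu_time_ticks;  /* total CPU time */
    void *page_table;              /* memory management */
    int open_files[16];            /* I/O status; -1 marks a free slot */
    struct pcb *next;              /* link for the ready, I/O, or event queue */
};

/* Fill in a PCB for a newly created process that is ready to run. */
void pcb_init(struct pcb *p, int pid, int parent_pid, int priority)
{
    memset(p, 0, sizeof *p);
    p->pid = pid;
    p->parent_pid = parent_pid;
    p->priority = priority;
    p->state = PROC_READY;
    for (int i = 0; i < 16; i++)
        p->open_files[i] = -1;
}
```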

Contrast program.

PCB updated during context switches (kernel in control).

Should a process be able to manipulate its PCB?

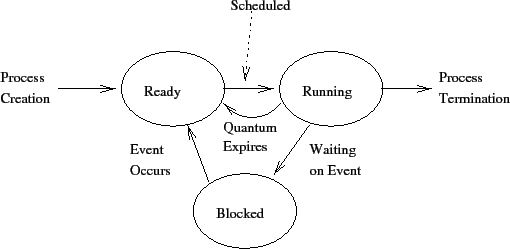

Determination of which process to run next (CPU scheduling).

Multiple queues for holding processes:

- Ready queue -- priority order.

- I/O queues -- request order.

Consider a disk write:

- Syscall.

- Schedule the write.

- Modify PCB state, move to I/O queue.

- Call short term scheduler to perform context switch.

Is it necessary to wait on a disk write?

- Event queues -- waiting on child completion, sleeping on timer, waiting for request (inetd).
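The queue moves in the disk write example can be sketched as linked lists of PCBs. This is a simplification under stated assumptions: the PCB is cut down to three fields, both queues are plain FIFOs (a real ready queue would be kept in priority order), and all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

enum proc_state { RUNNING, READY, BLOCKED };

struct pcb {
    int pid;
    enum proc_state state;
    struct pcb *next;
};

/* FIFO queue of PCBs (request order). */
struct queue { struct pcb *head, *tail; };

void enqueue(struct queue *q, struct pcb *p)
{
    p->next = NULL;
    if (q->tail)
        q->tail->next = p;
    else
        q->head = p;
    q->tail = p;
}

struct pcb *dequeue(struct queue *q)
{
    struct pcb *p = q->head;
    if (p) {
        q->head = p->next;
        if (!q->head)
            q->tail = NULL;
        p->next = NULL;
    }
    return p;
}

/* Blocking disk write: modify the PCB state and move it to the I/O queue.
   The caller would then invoke the short term scheduler. */
void block_on_disk(struct pcb *running, struct queue *disk_q)
{
    assert(running->state == RUNNING);
    running->state = BLOCKED;
    enqueue(disk_q, running);
}

/* I/O completion: the oldest request finishes and its process becomes ready. */
struct pcb *disk_done(struct queue *disk_q, struct queue *ready_q)
{
    struct pcb *p = dequeue(disk_q);
    if (p) {
        p->state = READY;
        enqueue(ready_q, p);
    }
    return p;
}
```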

Three types of schedulers:

- Long term scheduler.

- Medium term scheduler.

- Short term (CPU) scheduler.

Long term scheduler -- determines overall job mix:

- Balance of I/O, CPU bound jobs.

- Attempts to maximize CPU utilization, throughput, or some other measure.

- Runs infrequently.

Medium term scheduler -- cleans up after poor long term scheduler decisions:

- Over-committed memory -- thrashing.

- Determines candidate processes for suspending and paging out.

- Decreases degree of multiprogramming.

- Runs only when needed.

Short term scheduler -- decides which process to run next:

- Picks among processes in ready queue.

- Priority function.

- Runs frequently -- must be efficient.
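One possible priority function simply takes the ready process with the largest priority value. The sketch below assumes that a larger number means higher priority and that ties go to the earliest entry; both are illustrative choices, not something fixed by these notes.

```c
#include <assert.h>
#include <stddef.h>

struct pcb {
    int pid;
    int priority;   /* assumption: larger value = higher priority */
};

/* Returns the index of the ready process to run next, or -1 if the
   ready queue is empty. Ties go to the earliest entry. */
int pick_next(const struct pcb *ready, size_t n)
{
    int best = -1;
    for (size_t i = 0; i < n; i++)
        if (best < 0 || ready[i].priority > ready[best].priority)
            best = (int)i;
    return best;
}
```

A linear scan like this costs O(n) on every decision. Because the short term scheduler runs so often, the ready queue is usually kept in priority order so that the pick is cheap.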

Time line schematic:

Parent, child.

Where do the child's resources come from? By "resources" we mean:

- Stack.

- Heap.

- Code.

- Environment -- environment variables, open files, devices, etc.

Design questions:

- New text, same text?

- Make a copy of the memory areas? (Expensive.)

- Copy the environment?

- How are open files handled?

Solutions to the "copy the parent's address space" problem:

- Copy on write -- Mark all of the parent's pages read only and shared by parent and child. On any attempted write to such a page, make a copy and give the writing process its own private page. Fix page tables.

- vfork -- No copying at all. It is assumed that the child will perform an exec, which provides a private address space.
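Whichever solution the kernel uses, fork must still behave as if the child received a full copy. The sketch below uses the POSIX fork and waitpid calls to show that a write in the child is invisible to the parent. It cannot show whether the kernel copied eagerly or on write; that choice is hidden from user code. The function name is made up.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that overwrites its copy of `value`. Returns the
   parent's value afterwards, or -1 on error. */
int parent_value_after_child_write(void)
{
    int value = 1;
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        value = 2;        /* changes only the child's copy of the page */
        _exit(value);     /* report what the child saw */
    }
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    /* The child observed its own write... */
    assert(WIFEXITED(status) && WEXITSTATUS(status) == 2);
    /* ...but the parent's copy is untouched. */
    return value;
}
```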

Heavyweight process -- expensive context switch.

Thread:

- Lightweight process.

- Consists of PC, general purpose register state, stack.

- Shares code, heap, resources with peer threads.

- Easy context switches.

Task: peer threads, shared memory and resources.

Can peer threads scribble over each other?

What about non-peer threads?
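Peer threads share globals and the heap, so nothing stops one from overwriting another's data. A minimal sketch with POSIX threads: two peers update one shared counter, and a mutex keeps the updates from interfering. The function and variable names are illustrative.

```c
#include <assert.h>
#include <pthread.h>

static long counter;   /* one copy, visible to every peer thread */
static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg)
{
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        pthread_mutex_lock(&lock);
        counter++;                    /* shared data, private stack */
        pthread_mutex_unlock(&lock);
    }
    return NULL;
}

/* Runs two peer threads that each add `increments` to the shared
   counter. Returns the final count, or -1 if a thread cannot start. */
long run_two_threads(long increments)
{
    pthread_t t1, t2;
    counter = 0;
    if (pthread_create(&t1, NULL, worker, &increments) != 0)
        return -1;
    if (pthread_create(&t2, NULL, worker, &increments) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return counter;
}
```

Without the mutex the two read-modify-write sequences can interleave and lose updates, which is the "scribbling" hazard in its simplest form. Threads in different tasks have separate address spaces and cannot reach each other's data this way.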

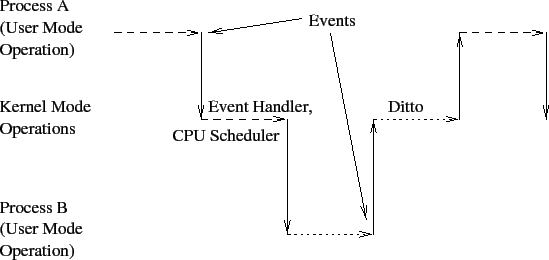

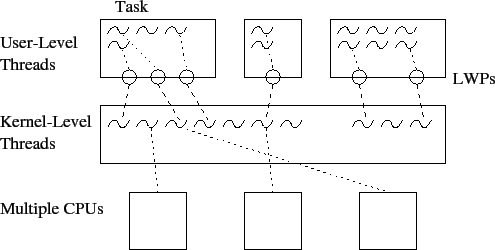

User-level threads:

- Implemented in user-level libraries; no system calls.

- Kernel only knows about the task.

- Threads schedule themselves within task.

- Advantage: fast context switch.

- Disadvantages:

  - Unbalanced kernel level scheduling.

  - If one thread blocks on a system call, peer threads are also blocked.

Kernel-level threads:

- Kernel knows of individual threads.

- Advantage: If a thread blocks, its peers can still proceed.

- Disadvantage: Slower context switch (kernel involved).

How do threads compare to processes?

- Context switch time.

- Shared data space. (Improved throughput for file server: shared data, quicker response.)

User-level threads multiplexed upon lightweight processes:

Basics: send(), receive() primitives.

Design Issues:

- Link establishment mechanisms:

  - Direct or indirect naming.

  - Circuit or no circuit.

- More than two processes per link (multicasting).

- Link buffering:

  - Zero capacity.

  - Bounded capacity.

  - Infinite capacity.

- Variable- or fixed-size messages.

- Unidirectional or bidirectional links (symmetry).

- Resolving lost messages.

- Resolving out-of-order messages.

- Resolving duplicated messages.

Resources owned by kernel.

Messages kept in a queue.

Assume:

- Only allocating process may execute receive.

- Any process (including "owner") may send.

- Variable-sized messages.

- Infinite capacity.

Primitives:

- int AllocateMB(void)

- int Send(int mb, char* message)

- int Receive(int mb, char* message)

- int FreeMB(int mb)
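To make the four primitives concrete, here is a single-process sketch that keeps each mailbox as a linked list of messages. Only the signatures come from the list above. Everything else is an assumption: 0 or -1 as return codes, NUL-terminated messages, a fixed table of 16 mailboxes, and a Receive that returns -1 on an empty mailbox where a real one would block. Ownership checks are left out, and "infinite capacity" is only approximated by heap allocation.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MAX_MB 16                       /* arbitrary table size */

struct msg { char *text; struct msg *next; };
struct mailbox { int in_use; struct msg *head, *tail; };

static struct mailbox boxes[MAX_MB];

static int valid(int mb)
{
    return mb >= 0 && mb < MAX_MB && boxes[mb].in_use;
}

/* Returns a mailbox number, or -1 if none is free. */
int AllocateMB(void)
{
    for (int i = 0; i < MAX_MB; i++)
        if (!boxes[i].in_use) {
            boxes[i].in_use = 1;
            boxes[i].head = boxes[i].tail = NULL;
            return i;
        }
    return -1;
}

/* Appends a private copy of the message to the mailbox's queue. */
int Send(int mb, char *message)
{
    if (!valid(mb) || message == NULL)
        return -1;
    struct msg *m = malloc(sizeof *m);
    if (!m)
        return -1;
    size_t len = strlen(message) + 1;
    m->text = malloc(len);
    if (!m->text) {
        free(m);
        return -1;
    }
    memcpy(m->text, message, len);
    m->next = NULL;
    if (boxes[mb].tail)
        boxes[mb].tail->next = m;
    else
        boxes[mb].head = m;
    boxes[mb].tail = m;
    return 0;
}

/* Copies the oldest message into the caller's buffer, which must be
   large enough. Returns -1 if the mailbox is empty or invalid. */
int Receive(int mb, char *message)
{
    if (!valid(mb) || !boxes[mb].head)
        return -1;
    struct msg *m = boxes[mb].head;
    boxes[mb].head = m->next;
    if (!boxes[mb].head)
        boxes[mb].tail = NULL;
    strcpy(message, m->text);
    free(m->text);
    free(m);
    return 0;
}

/* Discards any queued messages and releases the mailbox. */
int FreeMB(int mb)
{
    if (!valid(mb))
        return -1;
    while (boxes[mb].head) {
        struct msg *m = boxes[mb].head;
        boxes[mb].head = m->next;
        free(m->text);
        free(m);
    }
    boxes[mb].tail = NULL;
    boxes[mb].in_use = 0;
    return 0;
}
```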

Consider:

    Process1()
    {
        ...
        S1;
        ...
    }

    Process2()
    {
        ...
        S2;
        ...
    }

How can we guarantee that S1 executes before S2?
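One way to get this ordering is for Process2 to block on a receive that Process1 satisfies only after S1. The mailbox primitives in these notes are not a real API, so the sketch below swaps in a POSIX pipe as the blocking link. S1 and S2 are stand-ins that each write one character to a second pipe so the parent can check the order. All function and variable names are hypothetical.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Runs Process2 and Process1 as children and stores the order in which
   S1 ('1') and S2 ('2') ran in order[0..1]. Returns 0 on success. */
int run_ordered(char order[2])
{
    int sync_fd[2], log_fd[2];
    if (pipe(sync_fd) < 0)
        return -1;
    if (pipe(log_fd) < 0) {
        close(sync_fd[0]);
        close(sync_fd[1]);
        return -1;
    }

    pid_t p2 = fork();              /* Process2 is started first on purpose */
    if (p2 == 0) {
        char token;
        close(sync_fd[1]);
        /* "Receive": blocks until Process1 has sent its token. */
        if (read(sync_fd[0], &token, 1) != 1)
            _exit(1);
        if (write(log_fd[1], "2", 1) != 1)     /* S2 */
            _exit(1);
        _exit(0);
    }

    pid_t p1 = (p2 > 0) ? fork() : -1;
    if (p1 == 0) {
        if (write(log_fd[1], "1", 1) != 1)     /* S1 */
            _exit(1);
        if (write(sync_fd[1], "x", 1) != 1)    /* "Send": S1 is done */
            _exit(1);
        _exit(0);
    }

    /* Parent: close its write ends so readers see EOF if a child failed. */
    close(sync_fd[1]);
    close(log_fd[1]);
    if (p1 > 0)
        waitpid(p1, NULL, 0);
    if (p2 > 0)
        waitpid(p2, NULL, 0);

    ssize_t n = (p1 > 0 && p2 > 0) ? read(log_fd[0], order, 2) : -1;
    close(sync_fd[0]);
    close(log_fd[0]);
    return n == 2 ? 0 : -1;
}
```

Process2 cannot reach S2 until its read returns, and the token is written only after S1, so the log always reads "12" no matter which child the scheduler runs first.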

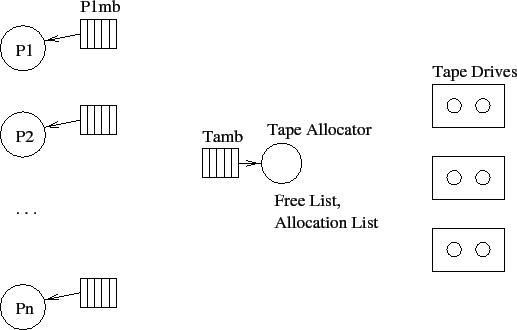

The situation:

Tape allocator process:

    initialize();
    while (1)
    {
        Receive(Tamb, message);
        if (message is a request)
        {
            if (there are enough tape drives)
                for each tape drive being allocated
                {
                    fork a handler daemon;
                    send daemon mb # in message to requesting process;
                    update lists;
                }
            else
                send a rejection message;
        }
        else if (message is a return)
        {
            update lists;
            send an ack message;
        }
        else
            ignore illegal messages;
    }
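The bookkeeping inside the loop can be isolated from the messaging. The sketch below reduces "update lists" to a count of free drives and returns the kind of reply the allocator would send. Forking daemons and the actual Send/Receive calls are omitted. The message and reply types, the struct, and the rule that a non-positive or excessive count is illegal are all assumptions.

```c
#include <assert.h>

enum msg_type { MSG_REQUEST, MSG_RETURN, MSG_OTHER };
enum reply { REPLY_GRANTED, REPLY_REJECTED, REPLY_ACK, REPLY_NONE };

struct allocator {
    int total_drives;
    int free_drives;
};

/* Handles one message; `count` is the number of drives requested or
   returned. Returns the reply the allocator would send. */
enum reply handle(struct allocator *a, enum msg_type type, int count)
{
    switch (type) {
    case MSG_REQUEST:
        if (count <= 0)
            return REPLY_NONE;               /* illegal message: ignore */
        if (count > a->free_drives)
            return REPLY_REJECTED;           /* not enough tape drives */
        a->free_drives -= count;             /* one daemon per drive goes here */
        return REPLY_GRANTED;
    case MSG_RETURN:
        if (count <= 0 || count > a->total_drives - a->free_drives)
            return REPLY_NONE;               /* returning drives never held */
        a->free_drives += count;
        return REPLY_ACK;
    default:
        return REPLY_NONE;                   /* ignore illegal messages */
    }
}
```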

Summary of user process actions:

- Send request to tape allocator.

- Receive message back giving mailbox(es) to use in communicating with tape drive(s).

- Start sending/receiving with tape drive daemon(s).

- Close tape drives.

- Send message to tape allocator returning tape drive(s).

Thomas P. Kelliher

2012-02-19